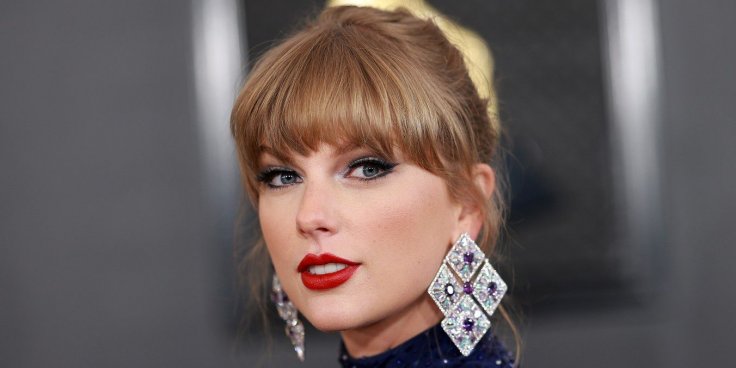

Pornographic deepfake images of Taylor Swift that circulated on social media last month have been traced back to an online challenge aimed at circumventing the safety measures designed to prevent artificial intelligence (AI) tools from creating lewd images, Bloomberg reported. The finding comes from Graphika, a social network analysis company.

According to Cristina López G., a senior analyst at Graphika, the spread of these images has drawn mainstream attention to AI-generated non-consensual intimate images and has also exposed how vulnerable other public figures are to the same abuse. "While viral pornographic pictures of Taylor Swift have brought mainstream attention to the issue of AI-generated non-consensual intimate images, she is far from the only victim," López G. said in an emailed statement. "In the 4chan community where these images originated, she isn't even the most frequently targeted public figure. This shows that anyone can be targeted."

The spread of the Swift deepfakes has prompted a significant political response, with the White House issuing a statement condemning their circulation. Congressman Joe Morelle, a Democrat from New York, has also said he intends to use the incident to draw attention to legislative efforts to make deepfake pornography a federal crime punishable by fines and potential jail time.

Taylor Swift is only one of several celebrities whose likeness has been exploited to create pornographic or violent deepfake images on platforms like 4chan. Others include Billie Eilish, Ariana Grande, and Emma Watson. Representatives for Swift, Eilish, Grande, and Watson did not respond to requests for comment.

As of January 30, users were still posting requests for Swift deepfakes, including one seeking violent imagery of her. While celebrity deepfake pornography has been available on various internet platforms for years, experts point to a growing number of cases targeting ordinary people. The trend is fueled by advances in technology that make deepfakes easier to create and more convincing. Victims often have few options for recourse and struggle to get the images removed from the internet.