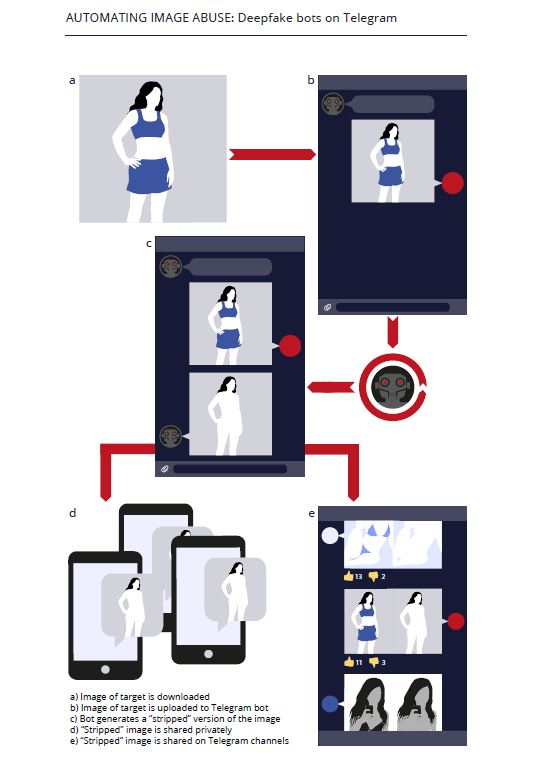

If you thought only politicians and celebrities were targeted with deepfake videos and images, you could be wrong. A recent report has revealed how vulnerable the general public has become to the technology. Researchers found that a Telegram channel had been sharing fake nude images of thousands of women. Any member of the channel can upload a picture, and a bot generates a photo-realistic fake nude image from it.

As per Sensity, an Amsterdam-based visual threat intelligence company, the Telegram channel has over 100,000 members and most of them are from Eastern Europe and Russia. More than 104,000 images of women have been uploaded to the channel with most of the pictures sourced from social media.

Unique Algorithm

Giorgio Patrini, the CEO and chief scientist at Sensity, said the algorithm used to make the deepfake nude images was unique because it didn't need thousands of images to create a photo-realistic fake copy. It needs only one. That means a single profile picture from a social media site like Facebook or Instagram would be enough to create a fake nude image.

"This one's unique because it's not just people talking or people sharing content, it's actually embedded in Telegram and we have not found something similar," Patrini told Buzzfeed News, adding that that was the reason why so many private individuals were harassed with such disturbing images.

While morphed images of celebrities are common, the channel mostly had photographs of private citizens, whose pictures were uploaded without consent, as per the report. The artificial intelligence bot only worked on images of women. Once a user uploaded a picture, the bot generated a fake nude copy of it and sent it back for free with a watermark. For a nominal fee of about $1.50, however, the watermark could be removed. To draw in others, there was also a referral program through which users could earn money.

Potential Extortion Material

The more disturbing aspect of the channel is that the bot was widely advertised on Russian social media site VK. Sensity, during its research, found at least 380 pages of such advertisements on VK. The researchers also shared the investigation report with VK and Telegram but didn't get a response. Later, VK told Buzzfeed News that such communities were not promoted using the platform's tool, adding that the company "would run an additional check and block inappropriate content."

Nina Jankowicz, author of How to Lose the Information War, said that the tool had huge implications for women in conservative societies. If such an image becomes public, the victim can lose her job and even face domestic violence. Such images can also be used for extortion, although Sensity didn't find any proof of that during its investigation.

"Essentially, these deepfakes are either being used in order to fulfill some sick fantasy of a shunted lover, or a boyfriend, or just a total creepster," she said, adding that it could potentially be used for blackmailing.

Abusing AI Technology

From a harmless entertainment tool used in the movie industry, deepfake technology built on artificial intelligence and machine learning has become a nuisance. These free-to-use tools are now widely used for personal or political benefit.

"That's the phenomenon of this technology becoming a commodity. No technical skill required, no specialized hardware, no special infrastructure or accessibility to specific services that are hard to reach," Patrini said.

A similar open-source tool was developed recently with over half a million people using the platform. But amid the rising popularity, the owners sold it. Sensity researchers believe the Telegram bot might have used the same technology to produce deepfake nude images.

However, when the BBC reached out to one of the admins of the Telegram channels, he said it was only for entertainment and could not be used to blackmail anyone as the photo was not realistic. "I don't care that much. This is entertainment that does not carry violence. No one will blackmail anyone with this, since the quality is unrealistic," said the admin, known as P.