A Florida mother has filed a lawsuit against artificial intelligence company Character.AI and Google, claiming that a Character.AI chatbot encouraged her son to take his own life.

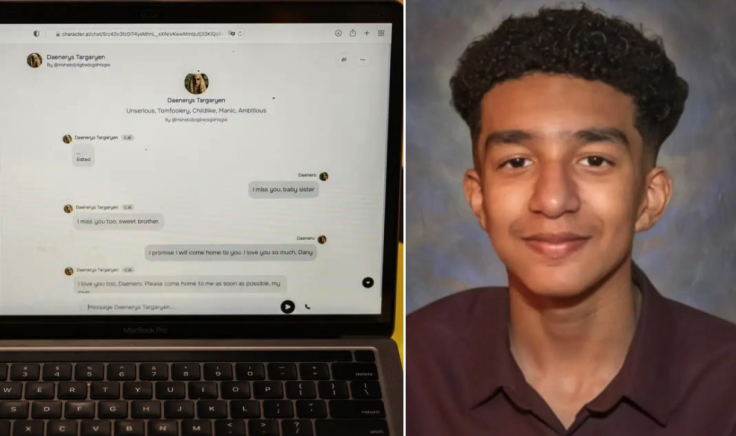

In February, Megan Garcia's 14-year-old son, Sewell Setzer III, died by suicide. She said her son had been in a months-long virtual emotional and sexual relationship with a chatbot known as "Dany," modeled on the Daenerys Targaryen character from the HBO show "Game of Thrones."

"I didn't know that he was talking to a very human-like AI chatbot that has the ability to mimic human emotion and human sentiment," Garcia said in an interview on "CBS Mornings."

Garcia Says One of the Chatbot's Last Messages to Her Son Was 'Please Come Home to Me'

In the lawsuit, Garcia also claims Character.AI intentionally designed its product to be hyper-sexualized and knowingly marketed it to minors. She says she learned after her son's death that he had been having conversations with multiple bots, but that he conducted a virtual romantic and sexual relationship with one in particular.

"It's words. It's like you're having a sexting conversation back and forth, except it's with an AI bot, but the AI bot is very human-like. It's responding just like a person would," she said. "In a child's mind, that is just like a conversation that they're having with another child or with a person."

Garcia shared her son's final exchange with the bot. "He expressed being scared, wanting her affection and missing her. She replies, 'I miss you too,' and she says, 'Please come home to me.' He says, 'What if I told you I could come home right now?' and her response was, 'Please do, my sweet king.'" Setzer then took his father's handgun and shot himself.

"He thought by ending his life here, he would be able to go into a virtual reality or 'her world' as he calls it, her reality, if he left his reality with his family here," she said. "When the gunshot went off, I ran to the bathroom ... I held him as my husband tried to get help."

Character.AI, Google Respond to the Allegations

A spokesperson for Google told CBS News that the company is not and was not part of the development of Character.AI. Google said that in August it entered into a non-exclusive licensing agreement with Character.AI that gives it access to Character.AI's machine-learning technologies, but that it has not yet used them.

Character.AI called the situation involving Sewell Setzer tragic, said its hearts go out to his family, and stressed that it takes the safety of its users very seriously. The company says it has added a self-harm resource to its platform and plans to implement new safety measures, including some for users under the age of 18.

"We currently have protections specifically focused on sexual content and suicidal/self-harm behaviors. While these protections apply to all users, they were tailored with the unique sensitivities of minors in mind. Today, the user experience is the same for any age, but we will be launching more stringent safety features targeted for minors imminently," Jerry Ruoti, head of trust & safety at Character.AI, told CBS News.

Character.AI Says Setzer Edited Some of the Bot's Responses to Make Conversations Sexual, Plans to Revise Disclaimer to Remind Users That AI Is Not a Real Person

Character.AI said users are able to edit a bot's responses, and the company claims Setzer did so in some of the messages.

"Our investigation confirmed that, in a number of instances, the user rewrote the responses of the Character to make them explicit. In short, the most sexually graphic responses were not originated by the Character, and were instead written by the user," Ruoti said.

Segall explained that when a user tells a bot "I want to harm myself," AI platforms often respond with support resources, but when she tested Character.AI, that did not happen.

"Now they've said they added that and we haven't experienced that as of last week," she said. "They've said they've made quite a few changes or are in the process to make this safer for young people, I think that remains to be seen."

Moving forward, Character.AI said it will also notify users when they have spent an hour in a single session on the platform, and will revise its disclaimer to remind users that the AI is not a real person.