OnePlus revealed one of the coolest features it is working on for the new OnePlus 7T Pro at its launch event in London. The device ships with OxygenOS 10, based on Android 10.

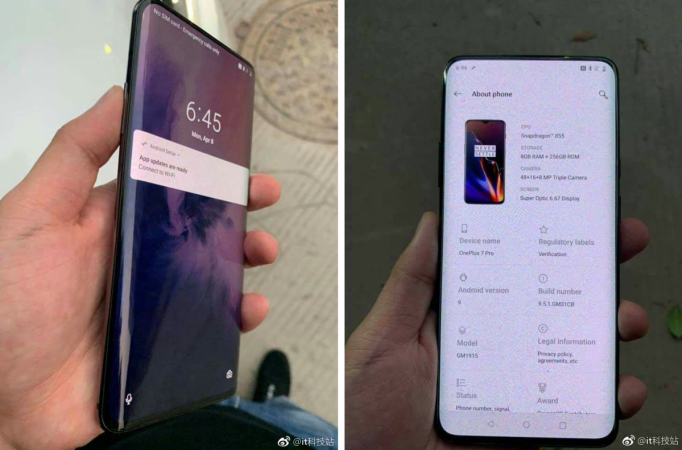

When it comes to the processor, there is a small upgrade over the earlier OnePlus 7 Pro. The OnePlus 7T Pro comes with a Snapdragon 855+ processor and 12GB of RAM, whereas the OnePlus 7 Pro has a Snapdragon 855 chipset with 6, 8, or 12GB of RAM. Amid these upgrades, one feature caught the attention of the crowd at the event.

Carl Pei, co-founder of OnePlus, announced the Instant Translate feature alongside several others. It is meant to help members of the OnePlus community stay connected and converse with ease.

The feature aims to break down language barriers, letting you talk with anyone around the world without worrying about which language they speak.

For instance, suppose you are on a video call with a friend who speaks a different language. Instant Translate shows subtitles in your native language on screen while the call continues, all in real time.

Earlier, Google launched a similar feature called Live Caption for Android 10. It transcribes speech in videos and audio messages in real time and shows the captions on screen. Users can mute the audio and only read the captions, or listen to the content and read the captions at the same time. The feature is likely to be most helpful to users with hearing disabilities.

We have seen a similar translation feature with Google's Pixel Buds earbuds, which support real-time translation in 40 languages. All you need is an Android phone, an up-to-date version of the Google Translate app, and a pair of Pixel Buds.

Phones like the Honor Magic 2 also have a similar feature that transcribes your calls in real time while you are still on the call.

Instant Translate looks like an impressive feature. For now, however, OnePlus has not shared any details about when it will be available. So we will have to wait until it rolls out and see how users respond.