Cardiovascular diseases (CVDs) kill nearly 18 million people globally, and in the U.S. they account for one in eight deaths, making them a major cause of concern. While many factors can lead to a heart attack, early detection of the root cause can not only save a life but also avoid huge medical expenses.

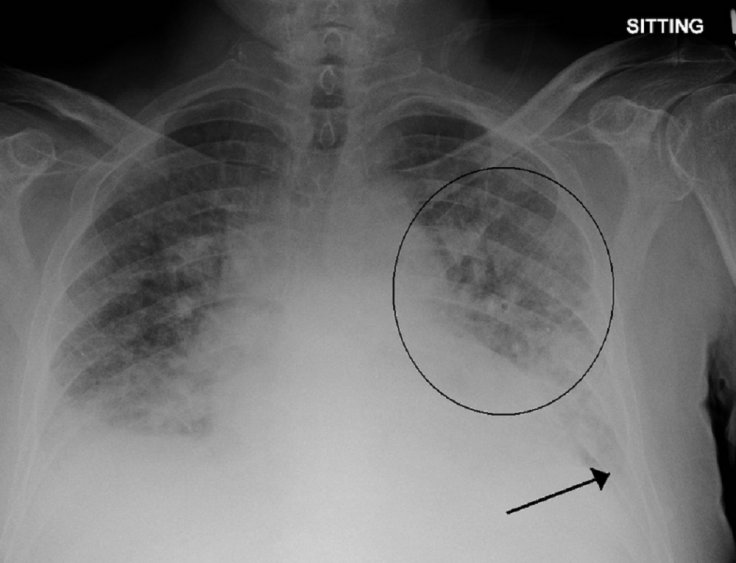

Pulmonary edema, or excess fluid in the lungs, is one of the many warning signs of a heart attack. Detecting it correctly can help prevent a heart attack. The problem is that the exact level of excess fluid buildup is difficult to determine at an early stage, because doctors and radiologists tend to depend on X-rays that are sometimes inconclusive. That makes it tough for doctors to come up with a treatment plan.

AI Tool to the Rescue

Advances in technology are starting to change that. As artificial intelligence (AI) progresses, scientists believe it can be harnessed to detect early signs of a heart attack, especially pulmonary edema. Because even seasoned radiologists find it difficult to detect signs of excess fluid build-up in the lungs, scientists at MIT's Computer Science and Artificial Intelligence Lab (CSAIL) have developed an AI-based tool that can "quantify how severe the edema is."

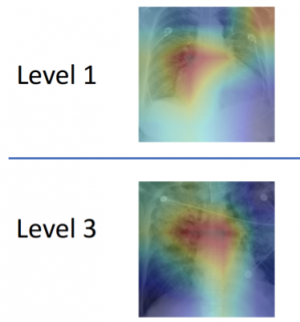

The machine-learning model will examine X-rays and rate the edema from 0 (healthy) to 3 (very, very bad). By determining the exact level, it will help doctors treat the condition early and prevent heart attacks. In tests, the tool correctly detected level 3 edema in 90 percent of cases, and it determined the right level more than half of the time.

The AI-based detection tool will be implemented at the Beth Israel Deaconess Medical Center (BIDMC) and technology giant Philips's research center. There are also plans to integrate the AI model into BIDMC's emergency room workflow by December.

"This project is meant to augment doctors' workflow by providing additional information that can be used to inform their diagnoses as well as enable retrospective analyses," said Ruizhi Liao, co-lead author of the paper that was published in August.

How Was It Developed?

To train the AI algorithm, the researchers needed a huge amount of data in the form of X-rays. Liao and her team turned to publicly available X-ray images. Using over 300,000 X-ray images and their corresponding reports, they trained the model and developed severity levels that all radiologists agreed on.

Liao's colleague Geeticka Chauhan was responsible for teaching the model to read the text in the reports, which often contained short sentences. One challenge was the tone and terminology of the reports: different radiologists use different tones and terminology, and the tool had to make sense of all of them. The researchers therefore developed a set of linguistic rules so that the tool could analyze reports consistently.
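
To make the idea of linguistic rules concrete, here is a minimal sketch of how rule-based reading of a report might look. The article does not describe the researchers' actual rules, so the keyword patterns, the mapping of phrases to levels, and the function name `severity_from_report` are all assumptions made purely for illustration.

```python
import re
from typing import Optional

# Hypothetical rules (not the researchers' actual rules): each pattern maps a
# phrase in a report to a severity level on the 0-3 scale. The negation rule
# comes first so that "no edema" is not mistaken for a positive finding.
RULES = [
    (re.compile(r"\bno (evidence of )?(pulmonary )?edema\b"), 0),
    (re.compile(r"\bsevere (pulmonary )?edema\b"), 3),
    (re.compile(r"\bmoderate (pulmonary )?edema\b"), 2),
    (re.compile(r"\bmild (pulmonary )?edema\b"), 1),
]


def severity_from_report(report: str) -> Optional[int]:
    """Return the level of the first matching rule, or None if no rule matches."""
    text = report.lower()  # normalize case so differently styled reports match
    for pattern, level in RULES:
        if pattern.search(text):
            return level
    return None  # the report says nothing the rules recognize


if __name__ == "__main__":
    for report in ["Mild pulmonary edema.", "No evidence of pulmonary edema.", "Lungs are clear."]:
        print(report, "->", severity_from_report(report))
```

Applying the same fixed rules to every report is what gives consistency across radiologists who phrase the same finding differently; a real rule set would need far more patterns than this toy list.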

A further challenge was training the model to read X-rays together with the corresponding report texts. However, success was almost instant. The AI model could successfully determine the edema level by reading X-rays and reports, even those that weren't labeled for edema.
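
One way to picture training on paired X-rays and reports is to treat each image and each report as a vector of numbers and to nudge the two vectors for the same patient closer together. The sketch below assumes a squared Euclidean distance as the measure of difference and updates the image vector directly; the article does not specify the loss or the networks used, and in a real model the update would be applied to the weights of the networks that produce the vectors. All names here are hypothetical.

```python
from typing import List


def squared_distance(image_vec: List[float], text_vec: List[float]) -> float:
    """Difference between an image representation and a text representation."""
    if len(image_vec) != len(text_vec):
        raise ValueError("both representations must have the same length")
    return sum((i - t) ** 2 for i, t in zip(image_vec, text_vec))


def step_toward_text(
    image_vec: List[float], text_vec: List[float], learning_rate: float = 0.1
) -> List[float]:
    """One gradient-descent step on the image vector.

    The gradient of sum((i - t)^2) with respect to i is 2 * (i - t).
    """
    return [i - learning_rate * 2.0 * (i - t) for i, t in zip(image_vec, text_vec)]


# Toy vectors standing in for one X-ray and its report (assumed values).
image_vec = [1.0, 0.0]
text_vec = [0.0, 1.0]

before = squared_distance(image_vec, text_vec)
after = squared_distance(step_toward_text(image_vec, text_vec), text_vec)

if __name__ == "__main__":
    print("distance before step:", before)
    print("distance after step:", after)
```

Repeating such steps over many image and report pairs is what lets the text guide how the images are interpreted.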

"Our model can turn both images and text into compact numerical abstractions from which an interpretation can be derived. We trained it to minimize the difference between the representations of the X-ray images and the text of the radiology reports, using the reports to improve the image interpretation," said Chauhan.

Other Areas Where It Can Help

The AI model can also be applied to other medical diagnoses. For example, the existing model could be trained the same way to read CT scans, which could help diagnose cancers that radiologists might have missed. It could also help in the early detection of Alzheimer's, even before signs are fully visible to doctors.

Tanveer Syeda-Mahmood, a researcher for IBM's Medical Sieve Radiology Grand Challenge, believes the method can also be applied to automated report generation.

"By learning the association between images and their corresponding reports, the method has the potential for a new way of automatic report generation from the detection of image-driven findings. Of course, further experiments would have to be done for this to be broadly applicable to other findings and their fine-grained descriptors," he said.